Why Consumer Messaging Apps Are a National Security Risk in the Age of AI

Secure Internal Communication Is a Sovereignty Issue for Governments

There is a quiet assumption embedded in modern government life that no longer holds.

The assumption is that communication is neutral — a utility rather than a strategic asset. That messages are merely containers for information, not signals in themselves. That as long as sensitive facts are not explicitly shared, nothing of consequence is revealed.

Artificial intelligence has shattered that assumption.

In the age of AI, communication is no longer just what is said. It is how, when, to whom, in what sequence, and under what pressure. These dimensions, once invisible or too costly to analyze at scale, are now the primary source of strategic intelligence. And they are generated continuously by consumer messaging applications that were never designed for sovereign use.

This is why secure internal communication in government is a national security imperative.

A Simple Question Governments Rarely Ask (But Should)

For decades, governments treated communication tools as replaceable conveniences. Phones, email, messaging apps: these were considered neutral pipes, with policy made elsewhere. Security was something applied at the edges: encryption, access controls, network protections. As long as the pipe was “secure enough,” attention moved on.

That worldview belonged to a pre-AI era.

Today, adversaries do not need to read your messages to understand you. They need only observe the patterns your communication creates. Artificial intelligence excels at pattern recognition, correlation, and prediction. It turns timing into intent, frequency into urgency, silence into hesitation. It does not eavesdrop in the traditional sense; it models behavior.

And consumer messaging apps are perfect behavioral sensors.

Consider a familiar situation. A senior minister receives a message late in the evening, followed minutes later by a small cluster of replies from the same circle of advisors. The conversation is brief, informal, and cautious. No classified information is exchanged. The next morning, a public position is announced that closely mirrors the direction implied by that exchange.

To the participants, this feels innocuous. To an AI system observing the metadata — timing, participants, response latency, historical patterns — it is a training example. Repeated often enough, it becomes a model.
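To make this concrete, here is a minimal sketch of how such features could be derived from metadata alone. The record layout (`MessageEvent` with `sender` and `sent_at`), the function name, and the sample exchange are all hypothetical, chosen only for illustration. No message content is used at any point.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass(frozen=True)
class MessageEvent:
    """Metadata for one message; deliberately has no content field."""
    sender: str
    sent_at: datetime


def exchange_features(events: list[MessageEvent]) -> dict:
    """Summarize one exchange using only who sent something and when."""
    if not events:
        raise ValueError("events must not be empty")
    ordered = sorted(events, key=lambda e: e.sent_at)
    first = ordered[0]
    # Seconds between each message and the one before it.
    gaps = [
        (later.sent_at - earlier.sent_at).total_seconds()
        for earlier, later in zip(ordered, ordered[1:])
    ]
    return {
        "initiator": first.sender,
        "participants": sorted({e.sender for e in ordered}),
        "start_hour": first.sent_at.hour,
        "message_count": len(ordered),
        "median_gap_seconds": median(gaps) if gaps else None,
    }


# Hypothetical late-evening exchange within a small circle.
events = [
    MessageEvent("minister", datetime(2025, 1, 6, 22, 41, 0)),
    MessageEvent("advisor_a", datetime(2025, 1, 6, 22, 43, 0)),
    MessageEvent("advisor_b", datetime(2025, 1, 6, 22, 44, 0)),
]
features = exchange_features(events)
```

One such record says little. Paired with what was announced the next morning, and repeated across months, records like this are the kind of labeled examples a predictive model can learn from.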

Why “End-to-End Encryption” Is No Longer Enough

Many senior officials still ask the same question when communication security is raised: “But isn’t it encrypted?”

It is an understandable question — and the wrong one.

Encryption protects content during transmission. It does not protect context. It does not obscure who initiated contact, how quickly others responded, whether communication surged before a decision or stopped afterward. It does not prevent metadata from being generated, retained, analyzed, or correlated. It does not prevent operating systems, cloud services, or AI-powered features from interacting with communication data outside the encrypted channel.
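The distinction between content and context can be sketched in a few lines. The `Envelope` type and its fields below are assumptions made for illustration, not a description of any particular app or protocol; which fields are actually exposed varies by design, and some systems work to minimize them.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Envelope:
    """Hypothetical message as seen in transit."""
    sender: str
    recipients: tuple[str, ...]
    sent_at: datetime
    ciphertext: bytes  # opaque without the recipients' keys


def observer_view(msg: Envelope) -> dict:
    """What a party without decryption keys can still record."""
    return {
        "sender": msg.sender,
        "recipients": msg.recipients,
        "sent_at": msg.sent_at.isoformat(),
        "size_bytes": len(msg.ciphertext),
    }


msg = Envelope(
    sender="advisor_a",
    recipients=("minister",),
    sent_at=datetime(2025, 1, 6, 22, 41, tzinfo=timezone.utc),
    ciphertext=bytes(64),  # placeholder standing in for encrypted content
)
view = observer_view(msg)
```

The observer never touches the plaintext, yet still learns who contacted whom, when, and roughly how much was said.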

In short, encryption prevents reading. AI does not need to read.

This is the conceptual gap that has left governments exposed. Leaders believe they are protected because messages cannot be intercepted in transit, while adversaries extract far more valuable intelligence by observing communication ecosystems from the outside.

How AI Turned Communication Into Strategic Intelligence

To understand why this matters, consider how modern AI systems operate. They are not merely reactive tools waiting for explicit commands. They are predictive engines trained to anticipate outcomes. When applied to political and institutional environments, they can ingest enormous volumes of data: public statements, leaked documents, social media, scheduling information, and, critically, communication metadata.

What makes this especially dangerous is not accuracy in isolation, but accumulation. AI models do not need to be perfect to be useful. They only need to be directionally right often enough to begin shifting advantage. Over time, small behavioral signals compound into increasingly reliable forecasts. This is how uncertainty — the traditional shield of statecraft — erodes quietly.
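A simplified calculation illustrates the compounding effect. Assume, purely for illustration, that each behavioral signal points in the right direction with probability `p`, that signals are independent, and that an observer goes with the majority. Real signals are correlated, so this overstates the effect, but the direction of the result is the point.

```python
from math import comb


def majority_accuracy(p: float, n: int) -> float:
    """Probability that a majority of n independent signals is correct,
    when each signal is correct with probability p (n must be odd)."""
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    needed = n // 2 + 1  # smallest count that forms a majority
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(needed, n + 1)
    )


# Illustrative assumption: each signal is right 60% of the time.
accuracies = {n: majority_accuracy(0.6, n) for n in (1, 11, 101)}
```

Under these assumptions, a signal that is only modestly better than a coin flip becomes a far more reliable forecast once enough observations accumulate, while a signal at exactly 50% never improves.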

Why Leadership Communication Became the Primary Target

The focus on senior leaders is not accidental. A successful manipulation at that level produces immediate, outsized impact. A single fraudulent authorization, a fabricated directive, or a convincingly impersonated voice call can redirect funds, delay responses, or alter policy trajectories. Artificial intelligence has dramatically lowered the cost and increased the realism of such attacks.

What once required insider access or long-term espionage can now be attempted remotely, repeatedly, and adaptively. Failed attempts are not wasted; they train the system. Each interaction refines tone, vocabulary, timing, and psychological leverage.

Consumer messaging platforms, saturated with authentic leadership communication, provide the raw material that makes these attacks plausible.

Consumer Messaging Apps Were Never Designed for Sovereign Use

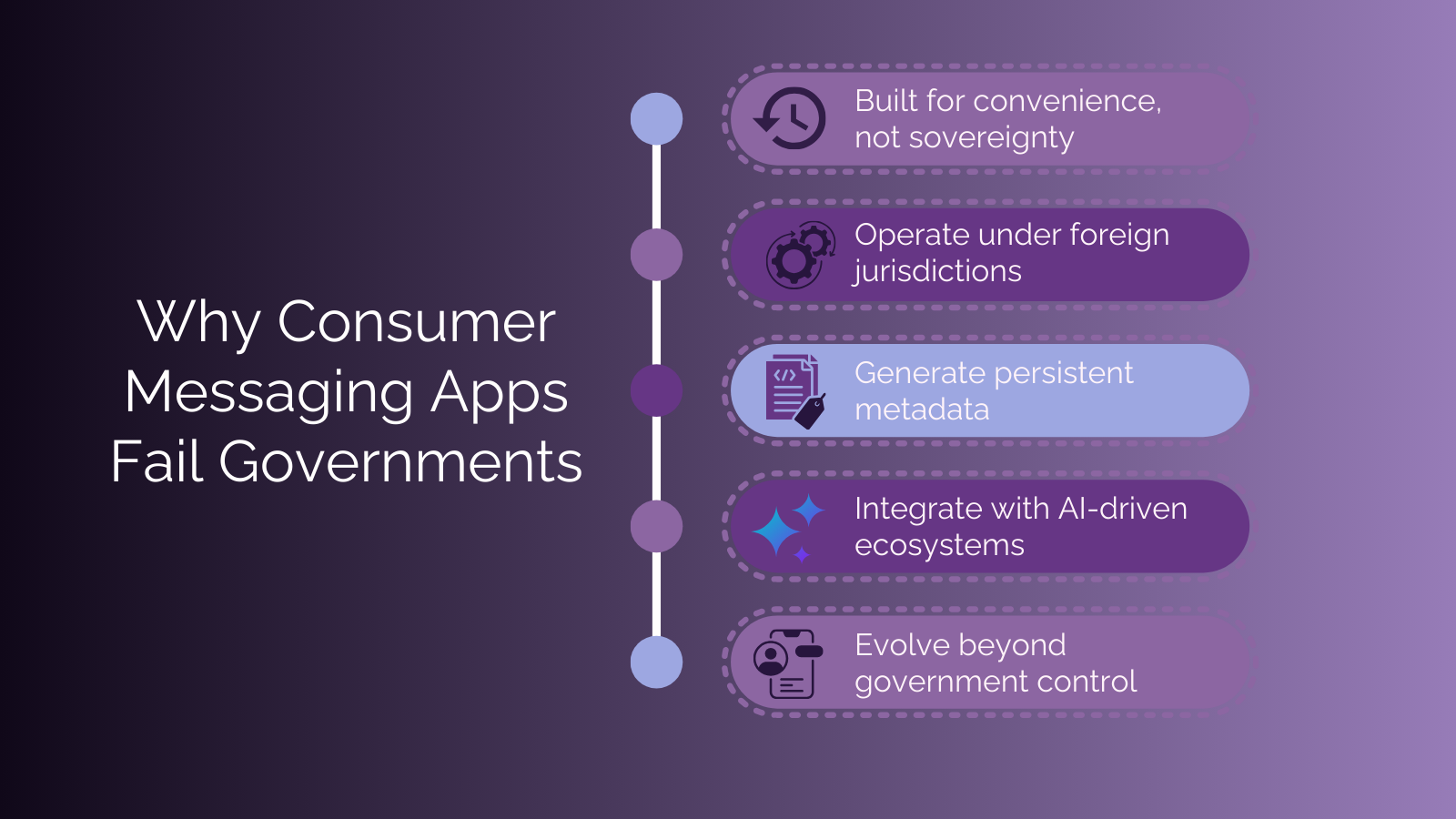

At this point, it becomes necessary to confront an uncomfortable truth: consumer messaging apps are not failing governments by accident. They are succeeding at what they were designed to do.

They are designed to maximize usability, connectivity, and data flow. They are embedded in cloud ecosystems optimized for analytics and AI enhancement. They operate across jurisdictions, subject to legal frameworks that prioritize corporate compliance over sovereign control. Their business models depend on metadata, behavioral insight, and continuous engagement.

None of this is malicious. But none of it is compatible with national security.

No amount of policy guidance or “appropriate use” training can change the fact that these platforms exist outside government control. They evolve according to commercial incentives, not strategic restraint. Features are added, integrations deepened, AI capabilities expanded — often without meaningful visibility for the institutions that depend on them.

For sovereign communication, this is not a configuration problem. It is a structural one.

Metadata: The Intelligence Layer Leaders Rarely See

One of the most persistent misconceptions among decision-makers is the belief that risk only arises when sensitive content is shared. This belief is understandable — and profoundly outdated.

In an AI-mediated intelligence environment, metadata is often more valuable than content. It reveals relationships, influence, urgency, and internal alignment. Over time, it exposes how decisions are actually made, rather than how organizations claim they are made.
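As a rough sketch of how relationships surface from metadata, the example below counts who communicates with whom. The names and the list of observed pairs are hypothetical, and simple message counts are only a crude stand-in for the far richer models the text describes.

```python
from collections import Counter

# Hypothetical (sender, recipient) pairs observed over time; no content.
observed = [
    ("minister", "advisor_a"),
    ("advisor_a", "minister"),
    ("minister", "advisor_a"),
    ("minister", "advisor_b"),
    ("advisor_b", "minister"),
    ("advisor_a", "advisor_b"),
]


def tie_strength(pairs):
    """Count messages per unordered pair of people."""
    return Counter(frozenset(pair) for pair in pairs)


def activity(pairs):
    """Count how many messages each person sent or received."""
    counts = Counter()
    for sender, recipient in pairs:
        counts[sender] += 1
        counts[recipient] += 1
    return counts


ties = tie_strength(observed)
most_connected = activity(observed).most_common(1)[0][0]
```

Even this toy tally identifies the central figure and the strongest working relationship without a single message being read.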

Governments systematically underestimate this risk because metadata feels technical, diffuse, and unspectacular. There is no dramatic breach, no leaked document, no single moment that triggers alarm. Responsibility is fragmented across platforms, vendors, and departments. The damage is cumulative rather than episodic — which makes it easy to ignore until strategic advantage has already shifted.

An adversary does not need to know what was decided if they can reliably predict how a decision will be reached and when it will occur.

This is how strategic advantage is quietly eroded: not through leaks, but through inference.

The Smartphone Problem: When the Device Becomes the Attack Surface

The devices themselves deepen the problem. Modern smartphones are not passive tools. They are AI platforms in their own right, constantly processing voice, text, images, and behavioral signals. Many of these processes operate at the operating-system level, beyond the visibility or control of individual applications.

Cloud backups replicate data far beyond the original device. AI assistants can process inputs before they are encrypted or after they are decrypted. System updates introduce new capabilities and dependencies without negotiation. Even secure enclaves can leak side-channel signals that sophisticated analysis may exploit.

For governments, this means that communication security cannot be separated from architectural control. As long as leadership communication occurs on platforms and devices governed by external actors, sovereignty remains conditional.

What Is Really at Stake: Decision Integrity

What is ultimately at stake is not privacy. It is decision integrity.

Decision integrity means that national choices are formed freely, without manipulation, coercion, predictive pressure, or synthetic influence. It means leaders can deliberate without their behavior being modeled, anticipated, and gamed. It means strategy is shaped by intent, not by adversarial foresight.

Artificial intelligence threatens decision integrity not by attacking decisions directly, but by shaping the environment in which decisions are made. When leaders operate inside communication systems that feed adversarial modeling, autonomy becomes performative. The appearance of sovereignty remains, but its substance erodes.

Why Traditional Cybersecurity Is Failing Governments Here

Traditional cybersecurity frameworks are poorly equipped to address this reality. They focus on infrastructure, networks, and data breaches — necessary protections, but insufficient ones. They were built to defend systems, not decision processes.

Leadership communication sits above infrastructure. It precedes policy. It defines response. Yet it often remains outside formal security architectures, governed by convenience and habit rather than design.

This is why secure internal communication must now be understood as critical national infrastructure. It deserves the same rigor, oversight, and strategic intent as defense systems, intelligence platforms, and energy grids.

What follows from this recognition is not a new tool, but a fundamentally different approach to communication itself.

From Consumer Platforms to Sovereign Communication Architecture

Some governments have already begun to internalize this shift. They are moving away from borrowed communication ecosystems and toward sovereign architectures built specifically to protect leadership communication in the AI era. These architectures are defined not by features, but by control: control over software, jurisdiction, infrastructure, identity, cryptography, and data flow.

Once this reality is acknowledged, the question is no longer whether consumer platforms can be made safe enough. They cannot. The question becomes what a communication system would need to look like if it were designed explicitly to protect leadership decision-making against AI-driven modeling and manipulation.

This is the strategic context in which RealTyme operates.

RealTyme was not created to compete with consumer messaging apps on convenience or popularity. It was designed to address a problem those platforms cannot solve: how to protect leadership communication and decision integrity when artificial intelligence has become a strategic weapon.

By minimizing metadata, enforcing sovereign hosting, integrating hardware-backed cryptography, isolating leadership communication during critical moments, and ensuring that no data feeds external AI systems, RealTyme aligns communication security with national security realities. It treats communication not as a productivity tool, but as a strategic surface that must be defended by design.

The Quiet Battle That Will Define the AI Era

The hardest transition governments face in this domain is cultural. Consumer messaging apps feel effortless because they were designed to disappear. Sovereign systems feel deliberate because they were designed to endure pressure, scrutiny, and attack.

But national security has never been about ease.

In the age of AI, the cost of convenience is no longer abstract. It is a strategic exposure. Governments that delay this reckoning will not fail dramatically. They will fail quietly, through predictable decisions, weakened negotiating positions, and influence they never see.

Final Thought

The defining contests of the AI era will not always be visible. They will not announce themselves as cyber incidents. They will unfold in conversations, in timing, in hesitation, in anticipation.

They will be decided in the communication layer.

The strongest states will not be those with the most advanced artificial intelligence. They will be those whose decisions cannot be modeled, intercepted, or manipulated.

And that strength begins with secure internal communication in government.

In the age of AI, sovereignty is no longer defended only at borders or networks — it is defended in conversations, long before decisions are announced.